
candle-stable-diffusion-3: Candle Implementation of Stable Diffusion 3/3.5

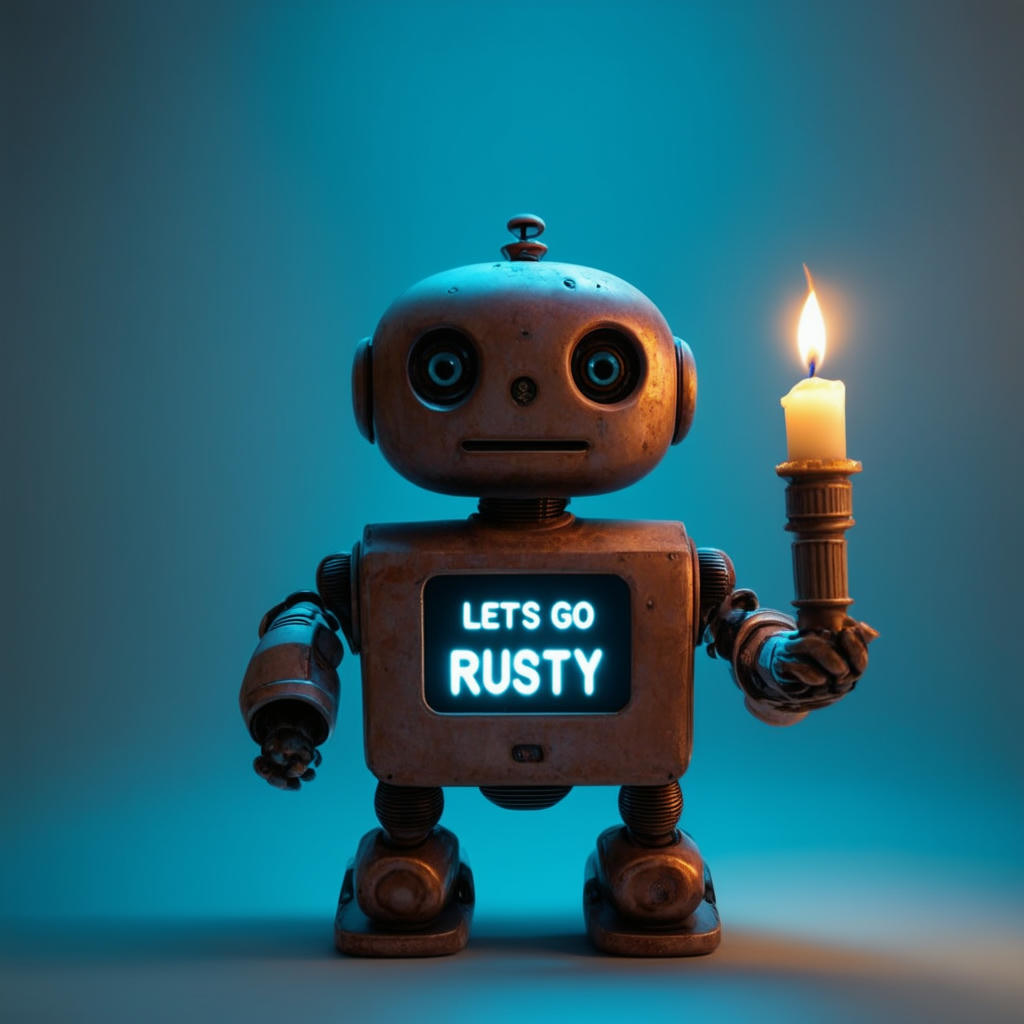

A cute rusty robot holding a candle torch in its hand, with glowing neon text "LETS GO RUSTY" displayed on its chest, bright background, high quality, 4k, generated by Stable Diffusion 3 Medium

Stable Diffusion 3 Medium is a text-to-image model based on the Multimodal Diffusion Transformer (MMDiT) architecture.

Stable Diffusion 3.5 is a family of text-to-image models with the latest improvements. It has three variants:

- Stable Diffusion 3.5 Large @ 8.1b params, with scaled and slightly modified MMDiT architecture.

- Stable Diffusion 3.5 Large Turbo, a distilled version that enables 4-step inference.

- Stable Diffusion 3.5 Medium @ 2.5b params, with improved MMDiT-X architecture.

Getting access to the weights

The weights of Stable Diffusion 3/3.5 are released by Stability AI under the Stability Community License. To gain access to the weights with your HuggingFace account, you need to visit the model repos on HuggingFace Hub, accept the conditions, and acquire a license.

To let your computer access the public-gated repos on HuggingFace, you may need to create a HuggingFace User Access Token (recommended) and log in on your computer if you have not done so before. A convenient way to log in is to use huggingface-cli:

huggingface-cli login

and you will be prompted to enter your token.

On the first run, the weights are downloaded automatically from the HuggingFace Hub. After the download, they are cached and remain accessible locally.

Running the model

cargo run --example stable-diffusion-3 --release --features=cuda -- \
  --which 3-medium --height 1024 --width 1024 \
  --prompt 'A cute rusty robot holding a candle torch in its hand, with glowing neon text \"LETS GO RUSTY\" displayed on its chest, bright background, high quality, 4k'

To use a different model, change the value of the --which option. Possible values are 3-medium, 3.5-large, 3.5-large-turbo and 3.5-medium.
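As an illustration of how the four --which values could map onto a model selector, here is a minimal standalone sketch using only the standard library. The `Which` enum, its variant names, and the error message are hypothetical and are not taken from the example's source; only the four option strings come from this README.

```rust
use std::str::FromStr;

/// Hypothetical selector mirroring the values accepted by `--which`.
/// The variant names are illustrative, not the example's actual types.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Which {
    Sd3Medium,
    Sd35Large,
    Sd35LargeTurbo,
    Sd35Medium,
}

impl FromStr for Which {
    type Err = String;

    /// Parse one of the four option strings listed in this README.
    fn from_str(s: &str) -> Result<Self, Self::Err> {
        match s {
            "3-medium" => Ok(Which::Sd3Medium),
            "3.5-large" => Ok(Which::Sd35Large),
            "3.5-large-turbo" => Ok(Which::Sd35LargeTurbo),
            "3.5-medium" => Ok(Which::Sd35Medium),
            other => Err(format!(
                "unknown model '{other}', expected one of: \
                 3-medium, 3.5-large, 3.5-large-turbo, 3.5-medium"
            )),
        }
    }
}

fn main() {
    // Two valid values and one invalid value to show both outcomes.
    for name in ["3-medium", "3.5-large-turbo", "sdxl"] {
        match name.parse::<Which>() {
            Ok(which) => println!("{name} -> {which:?}"),
            Err(err) => println!("{err}"),
        }
    }
}
```

Rejecting unknown strings with a message that lists the valid choices keeps a typo in --which from silently selecting the wrong model.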

To display the other available options, run:

cargo run --example stable-diffusion-3 --release --features=cuda -- --help

If your GPU supports it, Flash-Attention is strongly recommended: MMDiT is a transformer model that relies heavily on attention, so Flash-Attention can greatly improve inference speed. To use candle-flash-attn in the demo, you need both the flash-attn cargo feature (--features flash-attn) and the --use-flash-attn flag:

cargo run --example stable-diffusion-3 --release --features=cuda,flash-attn -- --use-flash-attn ...

Performance Benchmark

The benchmark below was run with Stable Diffusion 3 Medium, generating a 1024-by-1024 image with 28 steps of Euler sampling and measuring the average speed (iterations per second).

Both candle and candle-flash-attn were built from commit 0d96ec3.

System specs (desktop PCIe 5 x8/x8 dual-GPU setup):

- Operating System: Ubuntu 23.10

- CPU: i9 12900K, not overclocked.

- RAM: 64 GB dual-channel DDR5 @ 4800 MT/s

| GPU | w/o flash-attn (iter/s) | w/ flash-attn (iter/s) |
|---|---|---|
| RTX 3090 Ti | 0.83 | 2.15 |
| RTX 4090 | 1.72 | 4.06 |
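
To put these numbers in perspective, the short sketch below derives the flash-attn speedup and a rough sampling time per image from the table. It assumes the reported speed covers only the 28 Euler sampling iterations, so text encoding, VAE decoding, and model loading are not included. The helper names `speedup` and `sampling_seconds` are made up for this illustration.

```rust
/// Speedup factor obtained by enabling flash-attn.
fn speedup(without_fa: f64, with_fa: f64) -> f64 {
    with_fa / without_fa
}

/// Approximate seconds spent in the sampling loop for one image,
/// assuming the measured speed applies to every step.
fn sampling_seconds(steps: u32, iter_per_sec: f64) -> f64 {
    f64::from(steps) / iter_per_sec
}

fn main() {
    // Number of Euler sampling steps used in the benchmark.
    const STEPS: u32 = 28;

    // (GPU, iter/s without flash-attn, iter/s with flash-attn), from the table.
    let rows = [("RTX 3090 Ti", 0.83, 2.15), ("RTX 4090", 1.72, 4.06)];

    for (gpu, without_fa, with_fa) in rows {
        println!(
            "{gpu}: {:.2}x speedup, about {:.1}s -> {:.1}s of sampling per image",
            speedup(without_fa, with_fa),
            sampling_seconds(STEPS, without_fa),
            sampling_seconds(STEPS, with_fa),
        );
    }
}
```

Under that assumption, flash-attn gives roughly a 2.6x speedup on the RTX 3090 Ti and 2.4x on the RTX 4090, bringing sampling time from about 34 s to 13 s and from about 16 s to 7 s per image, respectively.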