
candle-stable-diffusion: A Diffusers API in Rust/Candle

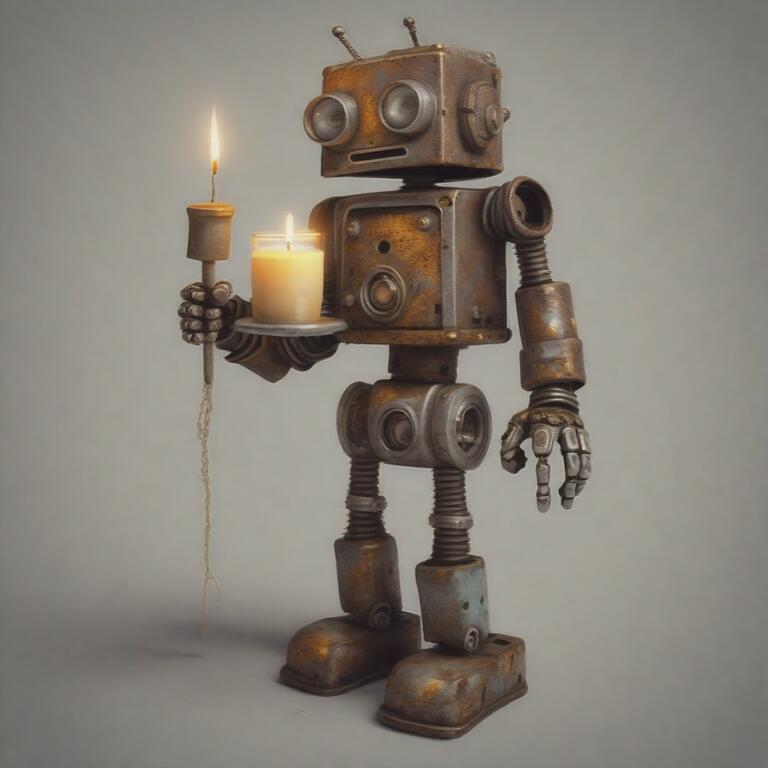

A rusty robot holding a fire torch in its hand, generated by Stable Diffusion XL using Rust and candle.

The stable-diffusion example is a conversion of diffusers-rs using candle rather than libtorch. This implementation supports Stable Diffusion v1.5 and v2.1, as well as Stable Diffusion XL 1.0 and Turbo.

Getting the weights

The weights are automatically downloaded for you from the HuggingFace Hub on the first run. There are various command line flags to use local files instead; run with --help to learn about them.

Running an example

    cargo run --example stable-diffusion --release --features=cuda,cudnn \
        -- --prompt "a cosmonaut on a horse (hd, realistic, high-def)"

The final image is named sd_final.png by default. The Turbo version is much faster than previous versions; to try it, add the --sd-version turbo flag, e.g.:

    cargo run --example stable-diffusion --release --features=cuda,cudnn \
        -- --prompt "a cosmonaut on a horse (hd, realistic, high-def)" --sd-version turbo

The default scheduler for the v1.5, v2.1 and XL 1.0 versions is the Denoising Diffusion Implicit Model scheduler (DDIM). The original paper and some code can be found in the associated repo. The default scheduler for the XL Turbo version is the Euler Ancestral scheduler.
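To give a feel for what a DDIM scheduler does at each step, here is a minimal sketch of the deterministic update (stochastic term set to zero) on plain slices. It is not the code used by this example: the function name `ddim_step` and the parameters `alpha_t` and `alpha_prev` (cumulative products of `1 - beta` at the current and previous timestep) are assumptions made for illustration.

```rust
/// One deterministic DDIM update applied element-wise (hypothetical helper).
///
/// `x_t` is the noisy sample, `eps` the noise predicted by the model,
/// `alpha_t` / `alpha_prev` the cumulative alpha products at the current
/// and the previous (less noisy) timestep.
fn ddim_step(x_t: &[f64], eps: &[f64], alpha_t: f64, alpha_prev: f64) -> Vec<f64> {
    x_t.iter()
        .zip(eps)
        .map(|(&x, &e)| {
            // Estimate the clean sample from the noisy one and the predicted noise.
            let x0 = (x - (1.0 - alpha_t).sqrt() * e) / alpha_t.sqrt();
            // Move to the previous timestep, reusing the same noise direction.
            alpha_prev.sqrt() * x0 + (1.0 - alpha_prev).sqrt() * e
        })
        .collect()
}

fn main() {
    // Build a noisy sample from a known clean sample and known noise.
    let x0 = [0.5_f64, -1.0];
    let noise = [0.3_f64, 0.8];
    let (alpha_t, alpha_prev) = (0.5_f64, 0.9_f64);
    let x_t: Vec<f64> = x0
        .iter()
        .zip(&noise)
        .map(|(&x, &e)| alpha_t.sqrt() * x + (1.0 - alpha_t).sqrt() * e)
        .collect();

    let x_prev = ddim_step(&x_t, &noise, alpha_t, alpha_prev);
    println!("{x_prev:?}");
}
```

With a perfect noise prediction and `alpha_prev` set to 1.0, the step recovers the clean sample, which is a convenient sanity check for this kind of update.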

Command-line flags

- --prompt: the prompt to be used to generate the image.
- --uncond-prompt: the optional unconditional prompt.
- --sd-version: the Stable Diffusion version to use, can be v1-5, v2-1, xl, or turbo.
- --cpu: use the cpu rather than the gpu (much slower).
- --height, --width: set the height and width for the generated image.
- --n-steps: the number of steps to be used in the diffusion process.
- --num-samples: the number of samples to generate iteratively.
- --bsize: the number of samples to generate simultaneously.
- --final-image: the filename for the generated image(s).
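If you want to drive the example from another Rust program, for instance to generate several images with different settings, the flags above can be assembled with `std::process::Command`. The sketch below only builds and prints the invocation. `GenConfig` and `build_command` are hypothetical names, and the values (512x512, 20 steps, out.png) are arbitrary choices for illustration.

```rust
use std::process::Command;

/// Hypothetical settings holder; each field maps to one documented flag.
struct GenConfig<'a> {
    prompt: &'a str,
    sd_version: &'a str, // one of: v1-5, v2-1, xl, turbo
    height: u32,
    width: u32,
    n_steps: u32,
    final_image: &'a str,
}

/// Build (but do not run) the `cargo run` invocation for the example.
fn build_command(cfg: &GenConfig<'_>) -> Command {
    let mut cmd = Command::new("cargo");
    cmd.args(["run", "--example", "stable-diffusion", "--release"])
        .arg("--features=cuda,cudnn")
        .arg("--") // everything after this goes to the example itself
        .args(["--prompt", cfg.prompt])
        .args(["--sd-version", cfg.sd_version])
        .args(["--height", &cfg.height.to_string()])
        .args(["--width", &cfg.width.to_string()])
        .args(["--n-steps", &cfg.n_steps.to_string()])
        .args(["--final-image", cfg.final_image]);
    cmd
}

fn main() {
    let cfg = GenConfig {
        prompt: "a cosmonaut on a horse (hd, realistic, high-def)",
        sd_version: "v1-5",
        height: 512,
        width: 512,
        n_steps: 20,
        final_image: "out.png",
    };
    let cmd = build_command(&cfg);
    // Print the command for inspection; call `.status()` on it to launch it.
    println!("{cmd:?}");
}
```

Passing each flag and its value as separate arguments avoids shell quoting issues with prompts that contain spaces or parentheses.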

Using flash-attention

Using flash attention makes image generation a lot faster and uses less memory. The downside is a long compilation time. You can set the CANDLE_FLASH_ATTN_BUILD_DIR environment variable to something like /home/user/.candle to ensure that the compilation artifacts are properly cached.

Enabling flash-attention requires both a feature flag, --features flash-attn, and the command line flag --use-flash-attn.

Note that flash-attention-v2 is only compatible with Ampere, Ada, or Hopper GPUs (e.g., A100/H100, RTX 3090/4090).

Image to Image Pipeline

...

FAQ

Memory Issues

This requires a GPU with more than 8GB of memory. As a fallback, the CPU version can be used with the --cpu flag, but it is much slower.

Alternatively, reducing the height and width with the --height and --width flags is likely to reduce memory usage significantly.
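A rough way to see why image size matters so much: assuming the usual 8x downsampling of the Stable Diffusion VAE, the number of latent positions shrinks with the image area, and a full self-attention score matrix over those positions shrinks with its square. The sketch below only illustrates that scaling. The helper names are hypothetical, and actual memory usage also depends on the weights, the dtype, and the attention implementation.

```rust
/// Number of spatial positions in the latent, assuming 8x VAE downsampling.
fn latent_positions(height: usize, width: usize) -> usize {
    (height / 8) * (width / 8)
}

/// Entries in one full self-attention score matrix over the latent
/// positions (positions x positions), at the highest latent resolution.
fn attention_scores(height: usize, width: usize) -> usize {
    let n = latent_positions(height, width);
    n * n
}

fn main() {
    for (h, w) in [(768, 768), (512, 512), (384, 384)] {
        println!(
            "{h}x{w}: {} latent positions, {} attention scores per head",
            latent_positions(h, w),
            attention_scores(h, w)
        );
    }
}
```

Under this simplified model, halving both the height and the width divides the number of latent positions by 4 and the size of the score matrix by 16.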