
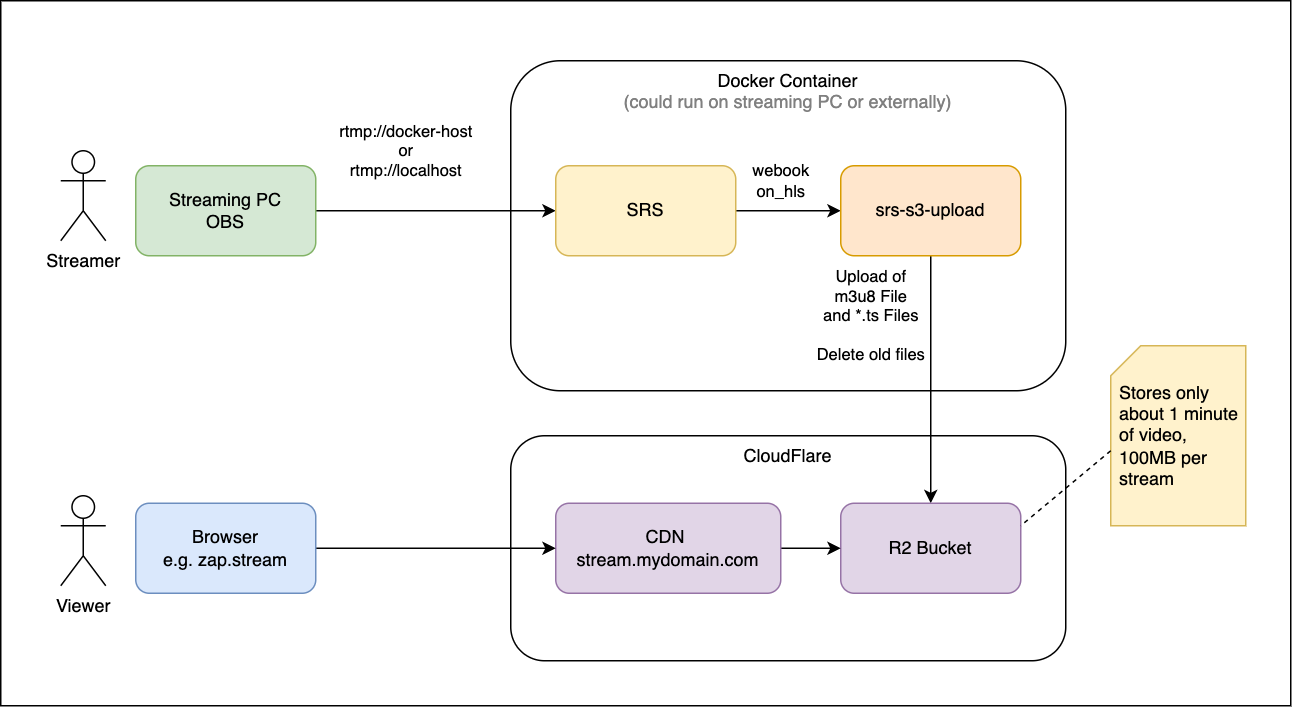

Overview

This project uploads HLS streaming data from SRS (Simple Realtime Server, https://ossrs.io/) to S3-compatible storage. The purpose is to publish a stream in HLS format to a cloud-based data store in order to leverage CDN distribution.

Why would you use this over other solutions?

- For a single streamer, you can operate within Cloudflare's free tier.

- You can leverage a CDN and can potentially reach many viewers (this has not been tested much).

- If you run the Docker container (SRS + upload tool) on your local machine, no external compute resources are needed.

This project implements a Node.js web server that provides a webhook, which can be registered as SRS's on_hls callback. Whenever a new video segment is created, SRS calls this webhook, and the service uploads the .ts video segment as well as the .m3u8 playlist to the storage bucket.
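The TypeScript sketch below shows the mapping step of such a webhook: turning one on_hls event into the objects to upload. It assumes the JSON body that SRS posts for on_hls contains `action`, `app`, `stream`, `file` and `m3u8` fields (as described in SRS's HTTP callback documentation; check this against your SRS version) and that the playlist refers to segments by file name only. The helper `uploadsForHlsEvent` and the key layout (`<stream key>/<segment file>` plus `<stream key>/stream.m3u8`) are hypothetical, chosen to match the public URLs used later in this README; the project's actual code may differ.

```typescript
import { basename } from "node:path";

// Assumed subset of the JSON body SRS posts to an on_hls callback.
interface OnHlsEvent {
  action: string; // expected to be "on_hls"
  app: string; // e.g. "live"
  stream: string; // the stream key, e.g. "abcdef"
  file: string; // local path of the segment that was just written
  m3u8: string; // local path of the playlist
  duration: number;
  seq_no: number;
}

// Hypothetical helper: map one on_hls event to the uploads it triggers.
// Objects are grouped under a "directory" named after the stream key.
function uploadsForHlsEvent(ev: OnHlsEvent): { localPath: string; key: string }[] {
  if (ev.action !== "on_hls") {
    throw new Error(`unexpected action: ${ev.action}`);
  }
  return [
    // The segment comes first, so the playlist never references a missing file.
    { localPath: ev.file, key: `${ev.stream}/${basename(ev.file)}` },
    { localPath: ev.m3u8, key: `${ev.stream}/stream.m3u8` },
  ];
}
```

Uploading the segment before the playlist is a deliberate ordering choice in this sketch: a player that fetches the new playlist should not find a segment that is not in the bucket yet.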

To keep bucket usage limited to a small amount of data, segments older than a certain time frame (e.g. 60 s) are automatically deleted from the bucket.
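A minimal sketch of this cleanup rule follows. It assumes that a segment's age is measured by the object's last-modified time in the bucket and that only `.ts` segments are pruned (playlists and thumbnails are kept). `StoredObject`, `segmentsToDelete` and the 60 s default are illustrative names and values, not the project's actual API; the returned keys would then be passed to the delete call of whatever S3 client is used.

```typescript
// Hypothetical shape of one entry from a bucket listing.
interface StoredObject {
  key: string;
  lastModified: Date;
}

// Select the .ts segments that are older than the retention window.
function segmentsToDelete(
  objects: StoredObject[],
  now: Date,
  retentionSeconds = 60,
): string[] {
  const cutoff = now.getTime() - retentionSeconds * 1000;
  return objects
    .filter((o) => o.key.endsWith(".ts") && o.lastModified.getTime() < cutoff)
    .map((o) => o.key);
}
```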

Configuration

This guide describes how to build the following setup based on Cloudflare's storage and CDN:

Prerequisites

What you need:

- A Cloudflare account

- A domain name that you can use with Cloudflare (this requires changing its nameservers)

- A host with Docker (either localhost or an external machine)

Cloudflare Setup

This configuration assumes that Cloudflare is used as the storage and CDN provider. In general, any S3-compatible hosting service can be used.

-

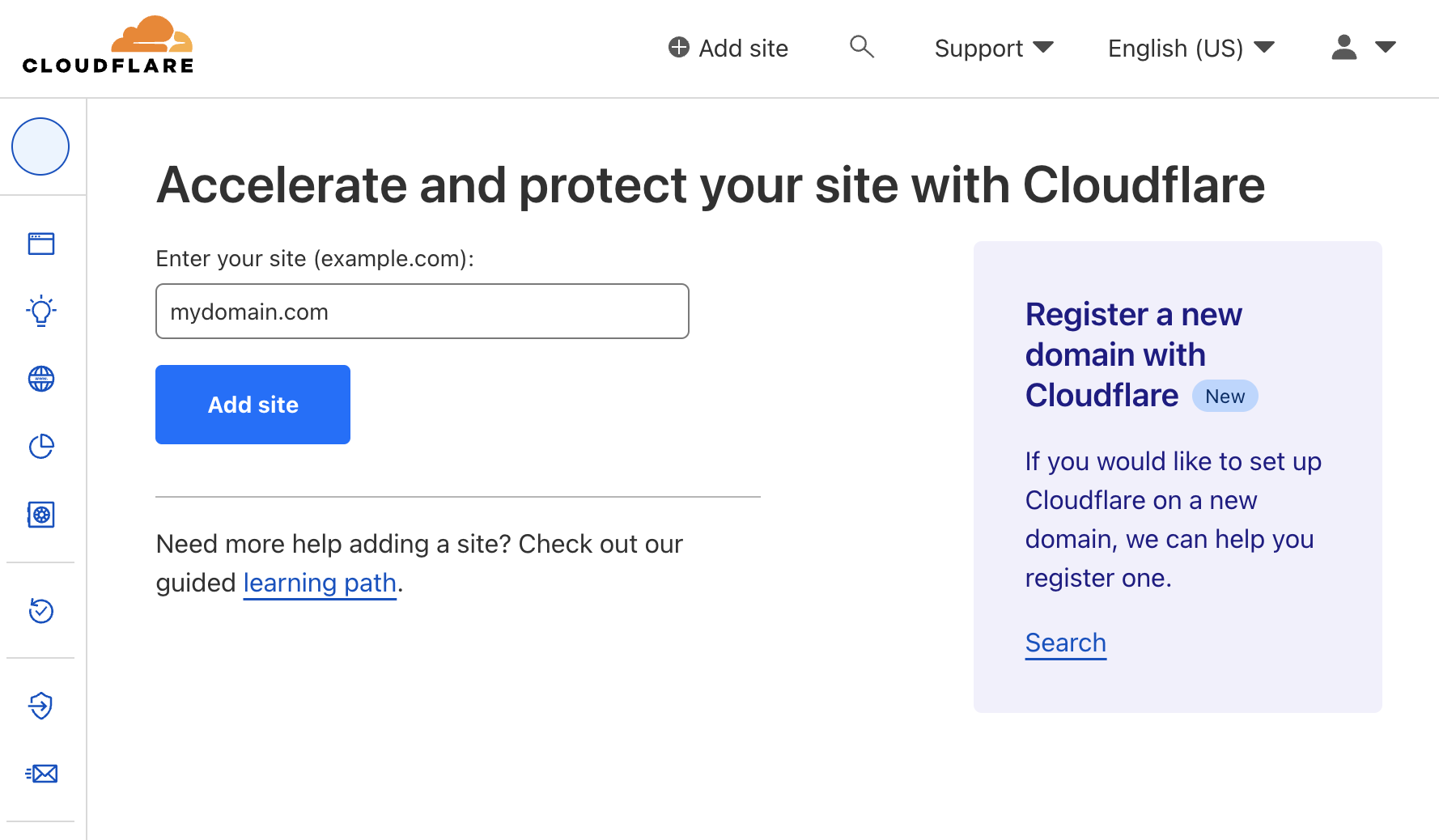

Create a new website for your domain. The setup of the Domain is not part of this guide. Alternatively you can also register a domain with Cloudflare. Your own domain is not absolutely necessary but it enabled you to use the CDN.

-

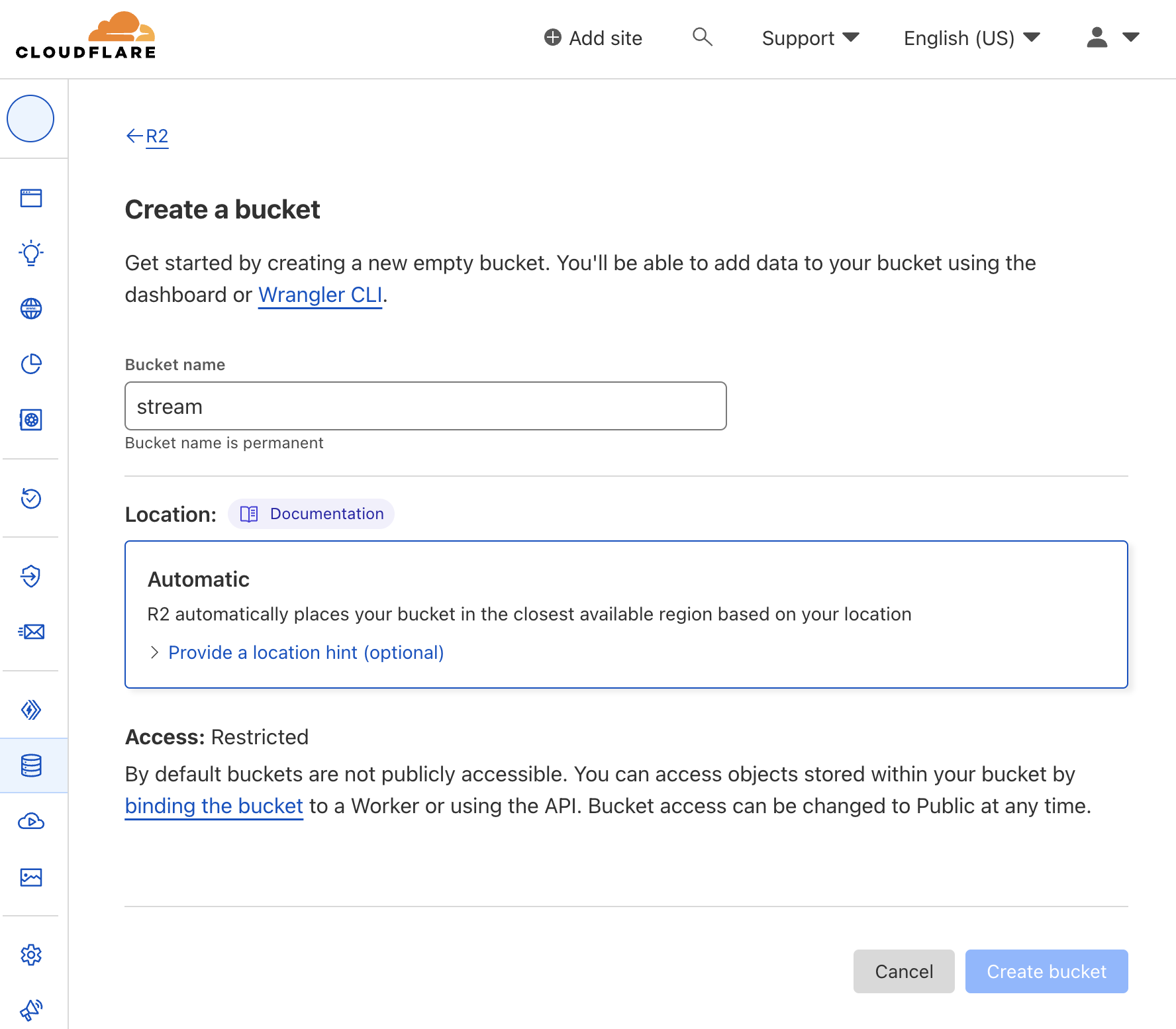

Then set up a Cloudflare Bucket, e.g.

stream -

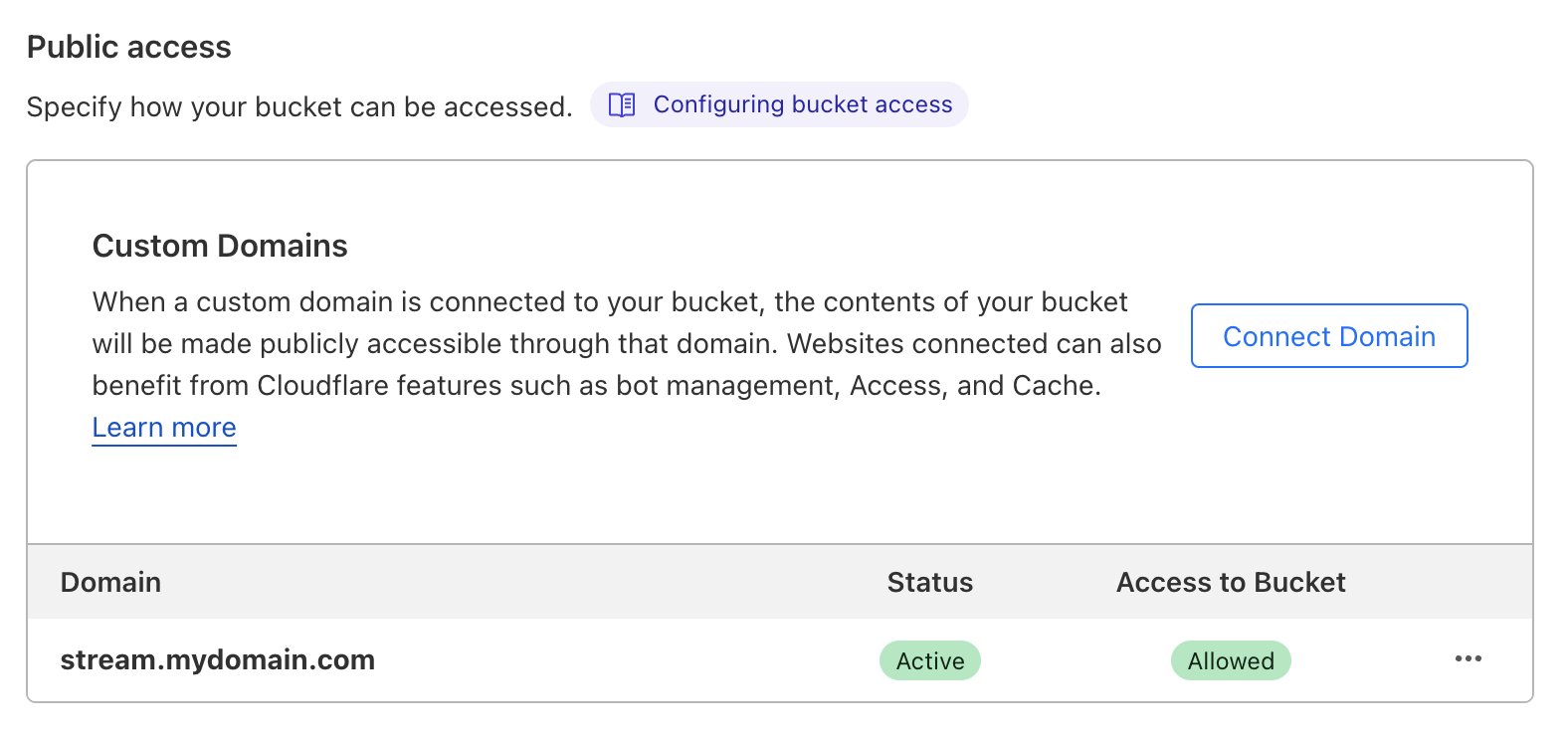

Make the bucket publicly accessible, by connecting a domain name, e.g. connect

stream.mydomain.com. The contents of the bucket will then be available under this domain name. -

When you have connected a domain name and the proxy setting is selected in CloudFlare all read access is automatically cached by the CDN.

-

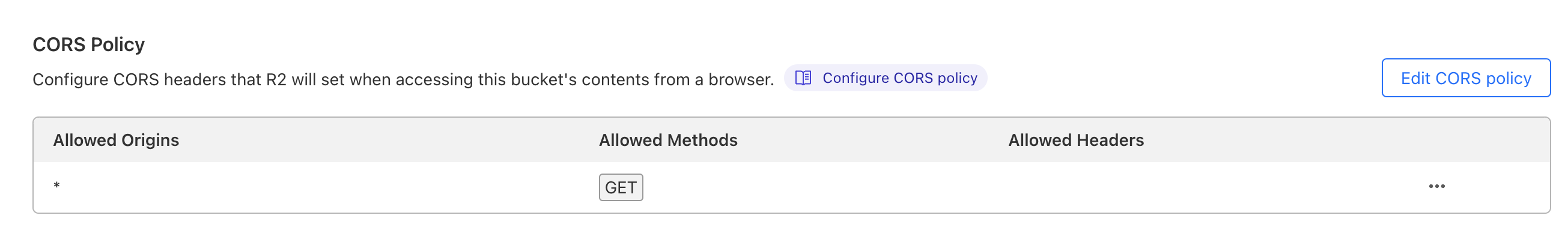

To be able to include the contents in a streaming website, e.g.

zap.stream, set the CORS settings of the bucket to allow any website to use your stream, i.e. host* -
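As a sketch, a permissive read-only CORS policy could look like the rule below, written as a TypeScript value and printed as JSON for pasting into the bucket's CORS settings. It assumes the bucket's CORS editor accepts S3-style `AllowedOrigins` / `AllowedMethods` fields; `*` allows every origin, and only read methods are listed because viewers never write to the bucket. The names `CorsRule` and `publicStreamCors` are hypothetical.

```typescript
// One CORS rule in the assumed S3-style JSON format.
interface CorsRule {
  AllowedOrigins: string[];
  AllowedMethods: string[];
  AllowedHeaders?: string[];
}

// Allow any website to read the playlist, segments and thumbnails.
const publicStreamCors: CorsRule[] = [
  {
    AllowedOrigins: ["*"],
    AllowedMethods: ["GET", "HEAD"],
  },
];

// Print the policy as JSON so it can be pasted into the CORS settings.
console.log(JSON.stringify(publicStreamCors, null, 2));
```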

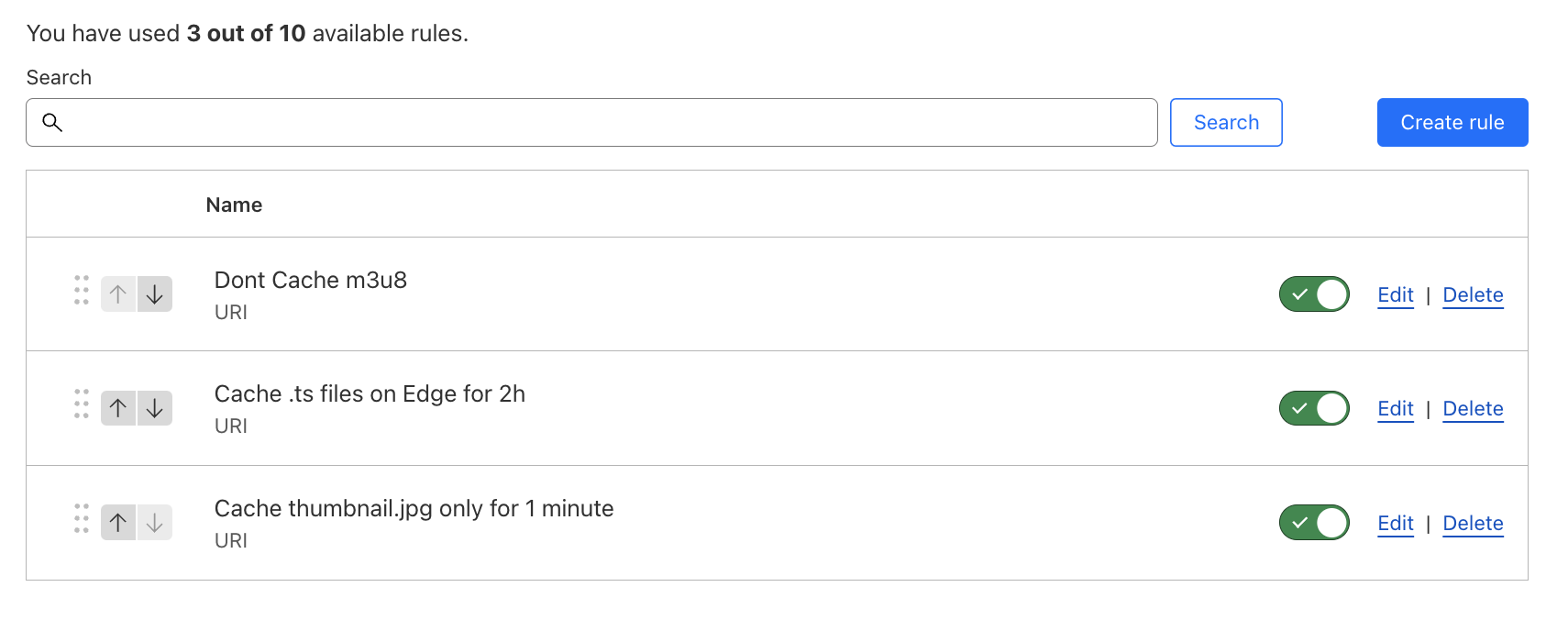

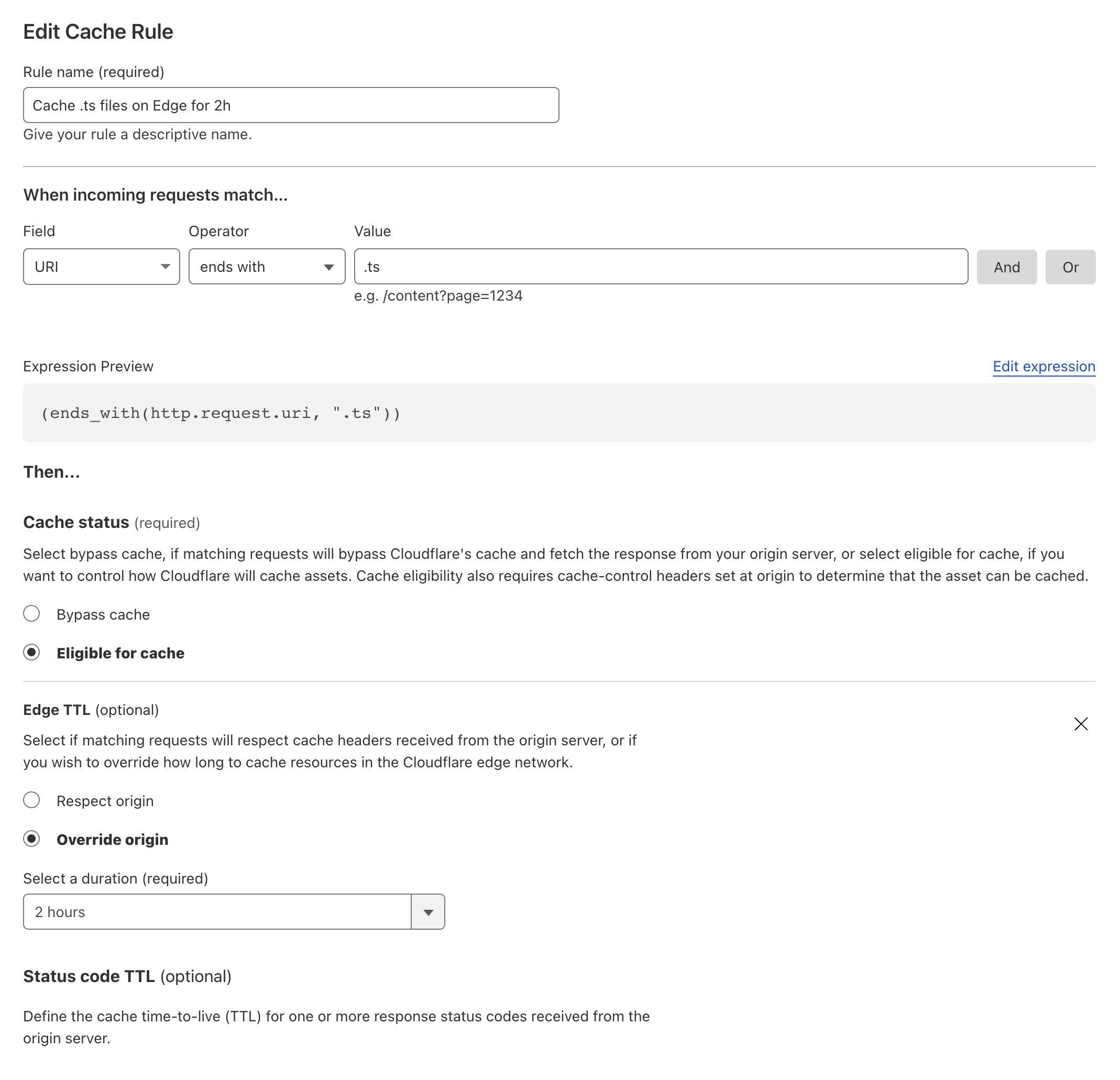

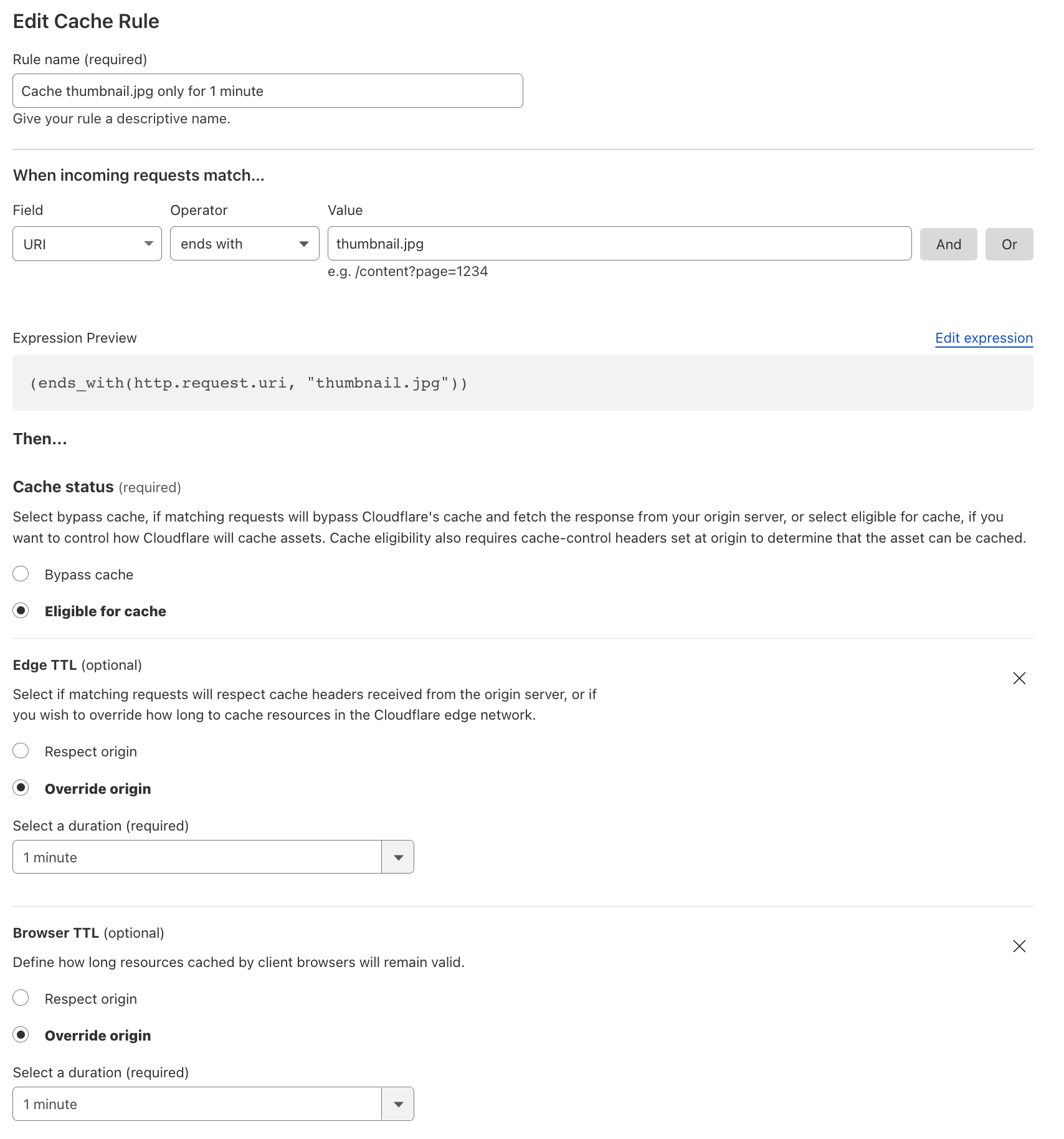

Configure cache rules to allow or prevent caching

We need to tell Cloudflare how our content should be cached.

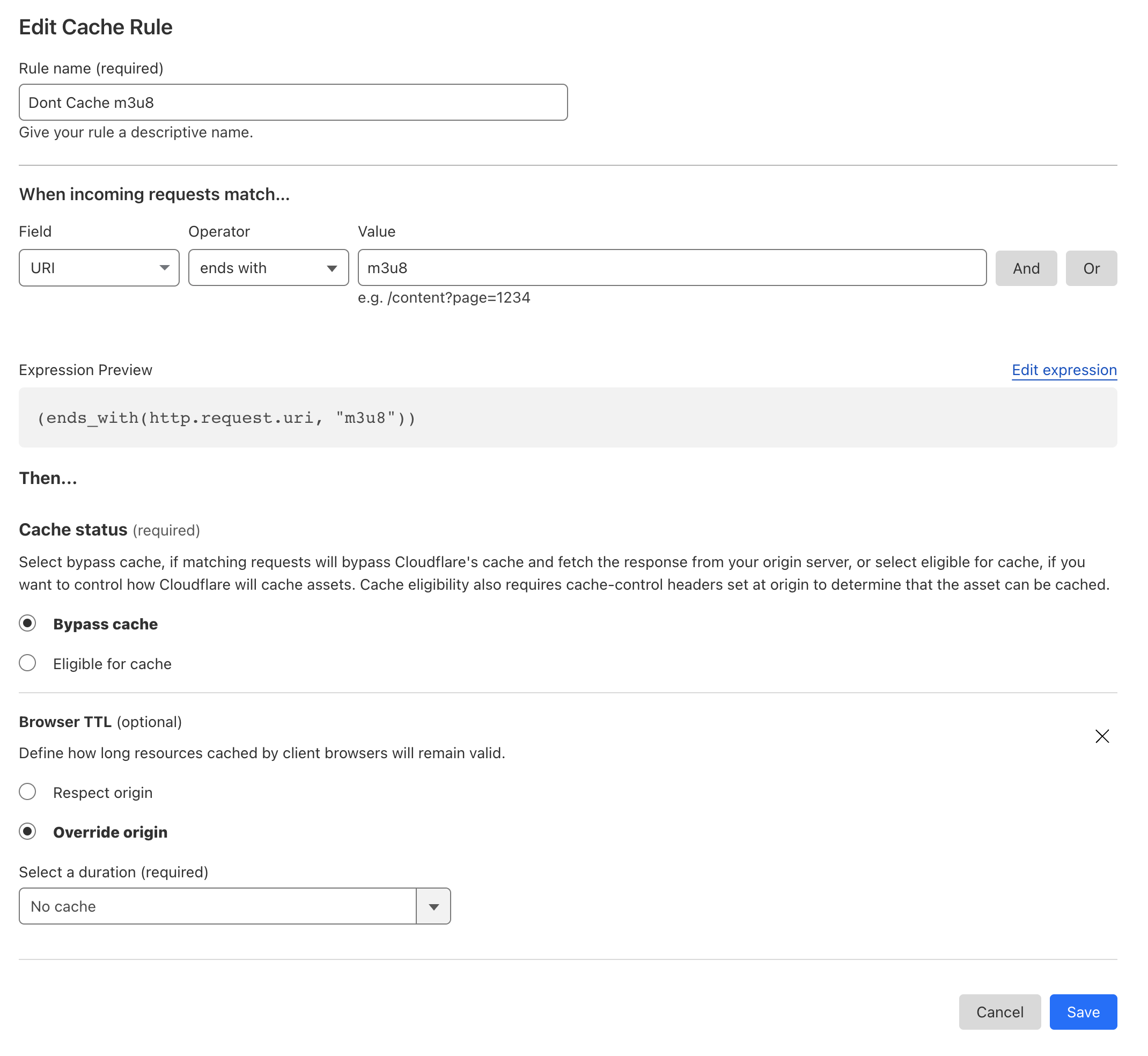

- The playlist file `.m3u8` is constantly updated, which is why caching must be disabled both in the CDN and in the browser.

- The video files `*.ts` can be cached, e.g. for 2 hours. Usually only a few minutes would be needed.

- If you want to use automatic thumbnail generation, you also need a short cache duration for `thumbnail.jpg` files.
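The three rules can be summarized as a small lookup, shown here as a hypothetical TypeScript function `cachePolicyFor`. It only documents the intended behaviour; the rules themselves are configured in the Cloudflare dashboard. The 2-hour segment TTL comes from the text above, while the 10-second thumbnail TTL is an assumed example value.

```typescript
type CachePolicy =
  | { cache: false } // bypass the cache in the CDN and in the browser
  | { cache: true; edgeTtlSeconds: number }; // cache in the CDN for this long

// Map an object path in the bucket to the cache behaviour described above.
function cachePolicyFor(path: string): CachePolicy | null {
  const fileName = path.split("/").pop() ?? "";
  if (fileName.endsWith(".m3u8")) return { cache: false }; // playlist changes constantly
  if (fileName.endsWith(".ts")) return { cache: true, edgeTtlSeconds: 2 * 60 * 60 };
  if (fileName === "thumbnail.jpg") return { cache: true, edgeTtlSeconds: 10 }; // assumed TTL
  return null; // no rule from this guide applies
}
```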

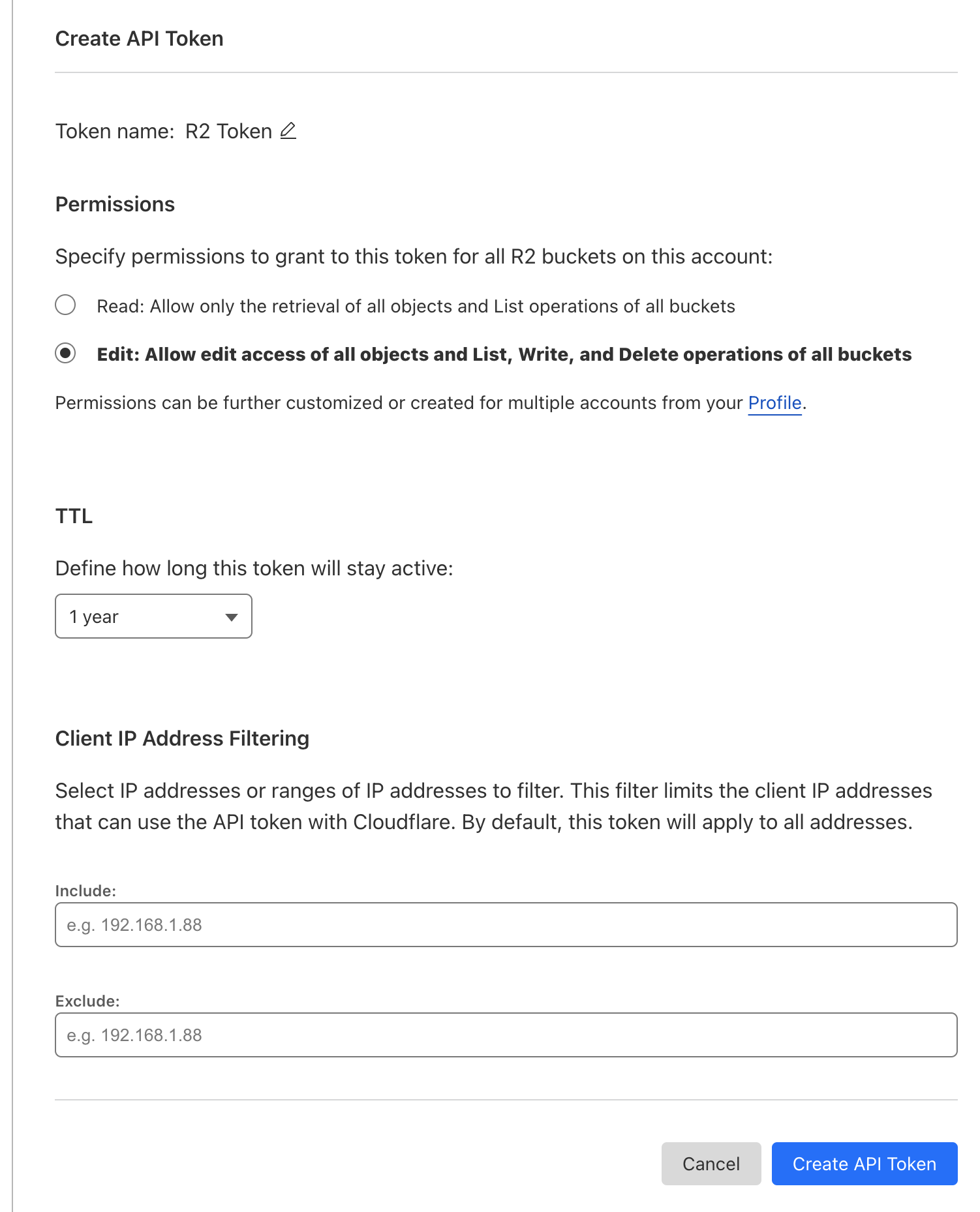

Create an API key with Edit (read/write) access to your buckets under "Manage API Tokens" and "Create API Token". Note down its ID and secret.

Now the Cloudflare setup is finished, and we can continue with setting up the Docker container that transfers the stream data to the bucket.

Environment

The API key that we saved before (S3 credentials) needs to be provided as environment settings:

- The endpoint for the S3-compatible storage. Cloudflare uses an endpoint that contains the account ID.

  ```
  S3_ENDPOINT=https://xxxxxxxxxxxxxxxxxxx.r2.cloudflarestorage.com
  ```

- The credentials for the S3 bucket to store the stream in:

  ```
  S3_ACCESS_KEY_ID=xxxxxxxxxx
  S3_ACCESS_KEY_SECRET=xxxxxxxxxx
  S3_BUCKET_NAME=stream
  ```
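A minimal sketch of how a service could read and validate these variables at startup, failing fast with a clear message when one is missing. `S3Config` and `loadS3Config` are hypothetical names; this is not necessarily how the project reads its settings.

```typescript
// Settings the upload service needs, named after the variables above.
interface S3Config {
  endpoint: string;
  accessKeyId: string;
  accessKeySecret: string;
  bucketName: string;
}

// Read the S3 settings from an environment map and report every missing one.
function loadS3Config(env: Record<string, string | undefined>): S3Config {
  const required = [
    "S3_ENDPOINT",
    "S3_ACCESS_KEY_ID",
    "S3_ACCESS_KEY_SECRET",
    "S3_BUCKET_NAME",
  ] as const;
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  const endpoint = env.S3_ENDPOINT as string;
  new URL(endpoint); // throws a TypeError if the endpoint is not a valid URL
  return {
    endpoint,
    accessKeyId: env.S3_ACCESS_KEY_ID as string,
    accessKeySecret: env.S3_ACCESS_KEY_SECRET as string,
    bucketName: env.S3_BUCKET_NAME as string,
  };
}
```

In a Node.js service this would be called as `loadS3Config(process.env)`.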

Usage

Build the Docker image:

```
docker build -t srs-s3-upload .
```

The current SRS configuration in the file conf/mysrs.conf is copied into the Docker image at build time and currently cannot be changed at runtime.

Environment settings listed in .env.sample can be configured at runtime, either through the Docker environment or through a file called .env.
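To illustrate the `.env` format, here is a simplified parser for `KEY=VALUE` lines. It is a sketch, not the loader this project uses (Node.js projects often rely on a package such as `dotenv` for this). `parseDotEnv` and `mergeEnv` are hypothetical, and the precedence in `mergeEnv` (values already set in the Docker environment override the file) is an assumption.

```typescript
// Parse KEY=VALUE lines; blank lines and # comments are skipped,
// and one pair of surrounding quotes is removed from the value.
function parseDotEnv(text: string): Record<string, string> {
  const result: Record<string, string> = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // not a KEY=VALUE line
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    const q = value[0];
    if (value.length >= 2 && (q === '"' || q === "'") && value.endsWith(q)) {
      value = value.slice(1, -1);
    }
    result[key] = value;
  }
  return result;
}

// Assumed precedence: variables already present in the environment win.
function mergeEnv(
  fromFile: Record<string, string>,
  env: Record<string, string | undefined>,
): Record<string, string> {
  const merged: Record<string, string> = { ...fromFile };
  for (const [k, v] of Object.entries(env)) {
    if (v !== undefined) merged[k] = v;
  }
  return merged;
}
```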

Run the Docker image with the RTMP port accessible. For a single test, run it in the foreground:

```
docker run -p 1935:1935 -it --rm \
  -e "S3_ENDPOINT=https://xxxxxxxxxxxxxxxxxxxx.r2.cloudflarestorage.com" \
  -e "S3_ACCESS_KEY_ID=xxxxxxxxxx" \
  -e "S3_ACCESS_KEY_SECRET=xxxxxxxxxx" \
  -e "S3_BUCKET_NAME=stream" \
  srs-s3-upload
```

or as a background process:

```
docker run --name srs-s3-upload -d -p 1935:1935 \
  -e "S3_ENDPOINT=https://xxxxxxxxxxxxxxxxxxxx.r2.cloudflarestorage.com" \
  -e "S3_ACCESS_KEY_ID=xxxxxxxxxx" \
  -e "S3_ACCESS_KEY_SECRET=xxxxxxxxxx" \
  -e "S3_BUCKET_NAME=stream" \
  srs-s3-upload
```

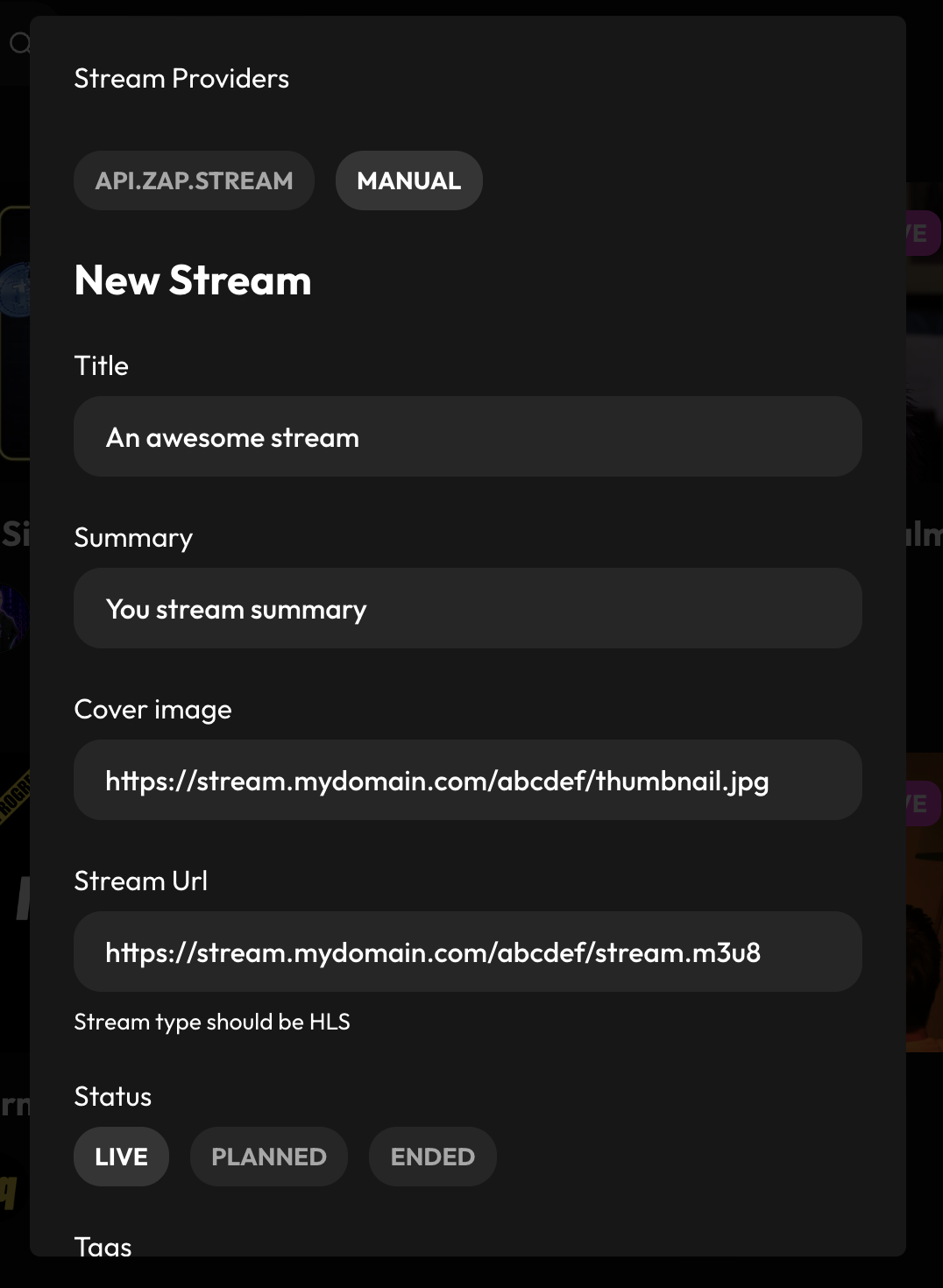

In a streaming application, use the following settings, assuming the Docker container runs on the local machine:

- Server: `rtmp://localhost`

- Stream Key: `abcdef` (use any text you like)

When you start the stream, you will see the HLS data being uploaded to the S3 storage bucket.

- The stream will be accessible from the URL: https://stream.mydomain.com/abcdef/stream.m3u8

- Generated thumbnails are at: https://stream.mydomain.com/abcdef/thumbnail.jpg
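The URL pattern can be expressed as a small helper. `streamUrls` is hypothetical; it assumes the bucket is served under the example domain `stream.mydomain.com` and that the uploader places `stream.m3u8` and `thumbnail.jpg` in a directory named after the stream key.

```typescript
// Build the public playlist and thumbnail URLs for a given stream key.
function streamUrls(
  domain: string,
  streamKey: string,
): { playlist: string; thumbnail: string } {
  // Encode the key in case it contains characters that are not URL-safe.
  const base = `https://${domain}/${encodeURIComponent(streamKey)}`;
  return {
    playlist: `${base}/stream.m3u8`,
    thumbnail: `${base}/thumbnail.jpg`,
  };
}
```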

The directory in the S3/R2 bucket is created based on the stream key. It is recommended to change the stream key for each stream; this prevents viewers from receiving video segments that the CDN has already cached from an earlier stream.
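One way to follow this recommendation is to generate a random key for each broadcast. The sketch below uses Node's `crypto.randomBytes`; the helper name `newStreamKey` and the 8-byte length are assumptions, and any sufficiently unique text works as a stream key.

```typescript
import { randomBytes } from "node:crypto";

// Generate a fresh, hard-to-guess stream key for each broadcast.
function newStreamKey(bytes = 8): string {
  // base64url output only contains [A-Za-z0-9_-], which is safe in URLs and object keys.
  return randomBytes(bytes).toString("base64url");
}
```

With the default of 8 bytes, the key is 11 characters long.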

Known Limitations

- Currently only streams with 1 camera and 1 format are supported.

- This upload/sync service needs to run on the same machine as SRS, since data is read from the local hard disk. This is the reason it currently runs in the same docker container.