Overview

This project uploads HLS streaming data from SRS (Simple Realtime Server, https://ossrs.io/) to S3-compatible storage. The purpose is to publish a stream in HLS format to a cloud data store in order to leverage CDN distribution.

This project implements a Node.js-based web server that exposes a webhook endpoint, which can be registered as SRS's on_hls webhook. Whenever SRS creates a new video segment, it calls this webhook, and the implementation in this project uploads the .ts video segment together with the .m3u8 playlist to the storage bucket.
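The webhook flow described above could look roughly like the following sketch. It is not taken from this project's source: the payload fields `cwd`, `file` and `m3u8` reflect the SRS HTTP callback payload as commonly documented and should be treated as assumptions, and `filesToUpload`, `createHookServer` and the `upload` callback are hypothetical names used only for illustration.

```javascript
const http = require("node:http");
const path = require("node:path");

// Turn an on_hls payload into the local files that need uploading:
// the new .ts segment and the updated .m3u8 playlist.
// The field names (cwd, file, m3u8) are assumptions about the SRS payload.
function filesToUpload(payload) {
  const cwd = payload.cwd || ".";
  return [payload.file, payload.m3u8]
    .filter(Boolean)
    .map((p) => path.resolve(cwd, p));
}

// Build (but do not start) an HTTP server that accepts the webhook.
// `upload` is a hypothetical async callback that pushes one file to the bucket.
function createHookServer(upload) {
  return http.createServer((req, res) => {
    if (req.method !== "POST") {
      res.writeHead(405).end();
      return;
    }
    let body = "";
    req.on("data", (chunk) => (body += chunk));
    req.on("end", async () => {
      try {
        const payload = JSON.parse(body);
        for (const file of filesToUpload(payload)) {
          await upload(file);
        }
        // SRS expects HTTP 200 with a zero error code; the exact body
        // format used here is an assumption.
        res.writeHead(200, { "Content-Type": "application/json" });
        res.end(JSON.stringify({ code: 0 }));
      } catch (err) {
        res.writeHead(500).end(String(err));
      }
    });
  });
}
```

Keeping `filesToUpload` separate from the server makes the path handling easy to check without a running SRS instance. Calling `createHookServer(upload).listen(port)` would start the hook on a port of your choice.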

To keep bucket usage small, segments older than a certain age (e.g. 60s) are automatically deleted from the bucket.
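As an illustration of this cleanup rule, the sketch below selects the segments that have passed the retention window. It assumes the uploader keeps a hypothetical in-memory list of `{ key, uploadedAt }` records; how this project actually tracks uploaded segments may differ.

```javascript
// Return the keys of all segments older than maxAgeMs.
// `segments` is a hypothetical list of { key, uploadedAt } records,
// with uploadedAt given in milliseconds.
function selectExpired(segments, nowMs, maxAgeMs) {
  return segments
    .filter((s) => nowMs - s.uploadedAt > maxAgeMs)
    .map((s) => s.key);
}

const now = 100_000;
const uploaded = [
  { key: "123456/stream-1.ts", uploadedAt: now - 90_000 },
  { key: "123456/stream-2.ts", uploadedAt: now - 30_000 },
];

// With a 60 s window, only stream-1.ts is old enough to be deleted.
console.log(selectExpired(uploaded, now, 60_000));
```

The retention window should be longer than the time span covered by the live playlist, otherwise players may request segments that have already been removed.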

Configuration

Cloudflare Setup

This configuration assumes that Cloudflare is used as the storage and CDN provider. In general, any S3-compatible hosting service can be used.

-

First set up a Cloudflare Bucket, e.g.

streams -

Make the bucket publicly accessible, by connecting a domain name

-

When you have connected a domain name (and the proxy setting is selected in CloudFlare) the access is automatically cached by the CDN.

-

Set the CORS settings of the bucket to allow any client, i.e. host

* -

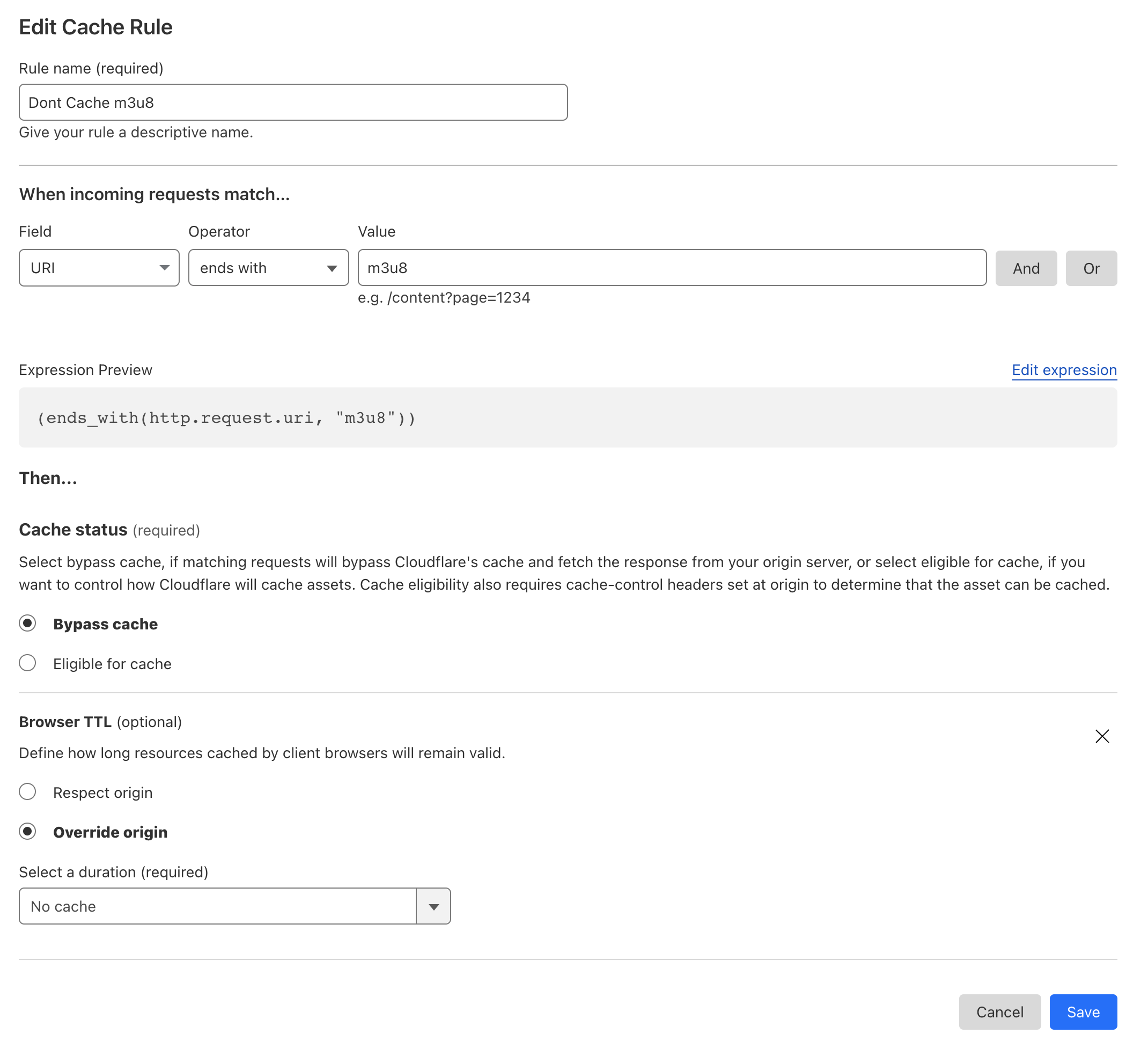

Configure cache rules, to allow / prevent caching

-

Create an API key for with read/write access to your buckets.

Environment

The S3 credentials need to be given as environment settings:

- Endpoint for S3-compatible storage. Cloudflare uses an endpoint that contains the account ID.

      S3_ENDPOINT=https://xxxxxxxxxxxxxxxxxxx.r2.cloudflarestorage.com

- Credentials for the S3 bucket to store the stream in.

      S3_ACCESS_KEY_ID=xx
      S3_ACCESS_KEY_SECRET=xxx
      S3_BUCKET_NAME=streams
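One way to read these settings at startup is sketched below. The variable names come from the list above; the function name `loadS3Config` and the shape of the returned object are assumptions made for this example, not this project's actual code.

```javascript
// The four environment settings named in this README.
const REQUIRED = [
  "S3_ENDPOINT",
  "S3_ACCESS_KEY_ID",
  "S3_ACCESS_KEY_SECRET",
  "S3_BUCKET_NAME",
];

// Read the S3 settings from an environment object and fail early
// if any of them is missing or empty.
function loadS3Config(env = process.env) {
  const missing = REQUIRED.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment settings: ${missing.join(", ")}`);
  }
  return {
    endpoint: env.S3_ENDPOINT,
    accessKeyId: env.S3_ACCESS_KEY_ID,
    secretAccessKey: env.S3_ACCESS_KEY_SECRET,
    bucket: env.S3_BUCKET_NAME,
  };
}
```

Failing at startup with the names of the missing settings is usually easier to debug than a rejected upload once the first segment arrives.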

Usage

Build the docker image

docker build -t srs-s3 .

The SRS configuration in conf/mysrs.conf is copied into the Docker image and currently cannot be changed at runtime.

The environment settings listed in .env.sample are configurable at runtime, either through the Docker environment or through a file called .env.

Run the Docker image with the RTMP port accessible. For a quick test in the foreground:

    docker run -p 1935:1935 -it --rm \
      -e "S3_ENDPOINT=https://xxxxxxxxxxxxxxxxxxxx.r2.cloudflarestorage.com" \
      -e "S3_ACCESS_KEY_ID=xxxxxxxxxx" \
      -e "S3_ACCESS_KEY_SECRET=xxxxxxxxxx" \
      -e "S3_BUCKET_NAME=streams" \
      srs-s3

or as a background process:

    docker run --name srs-s3-upload -d -p 1935:1935 \
      -e "S3_ENDPOINT=https://xxxxxxxxxxxxxxxxxxxx.r2.cloudflarestorage.com" \
      -e "S3_ACCESS_KEY_ID=xxxxxxxxxx" \
      -e "S3_ACCESS_KEY_SECRET=xxxxxxxxxx" \
      -e "S3_BUCKET_NAME=streams" \
      srs-s3

In a streaming application, use the following settings, assuming the Docker image runs on the local machine:

- Server: rtmp://localhost

- Stream Key: 123456 (any text you like)

When you start the stream, you will see the HLS data being uploaded to the S3 storage bucket. The stream will be accessible from the URL: https://your.domain/123456/stream.m3u8

The directory in the S3 bucket will be created based on the stream key. It is recommended to change the stream key for each stream.
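The relationship between stream key, bucket directory and public URL can be sketched as follows. The file name `stream.m3u8` is taken from the example URL above; `objectKey` and `playlistUrl` are hypothetical helpers, and the assumption is that each file is stored under a directory named after the stream key.

```javascript
// Bucket key for a file belonging to a stream: "<streamKey>/<fileName>".
function objectKey(streamKey, fileName) {
  return `${streamKey}/${fileName}`;
}

// Public playlist URL for a stream, given the domain connected to the bucket.
// Each path part is URL-encoded so that unusual stream keys stay valid.
function playlistUrl(domain, streamKey) {
  const key = objectKey(streamKey, "stream.m3u8");
  const encoded = key.split("/").map(encodeURIComponent).join("/");
  return `https://${domain}/${encoded}`;
}

console.log(playlistUrl("your.domain", "123456"));
// https://your.domain/123456/stream.m3u8
```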

Known Limitations

- Currently only streams with 1 camera and 1 format are supported.

- This upload/sync job needs to run on the same machine as SRS, since data is read from the local hard disk. This is the reason it currently runs in the same docker container.