Mirror of https://github.com/huggingface/candle.git

synced 2025-06-20 20:09:50 +00:00

Module Docs (#2624)

* update whisper
* update llama2c
* update t5
* update phi and t5
* add a blip model
* qlamma doc
* add two new docs
* add docs and emoji
* additional models
* openclip
* pixtral
* edits on the model docs
* update yu
* update a few more models
* add persimmon
* add model-level doc
* names
* update module doc
* links in hiera
* remove empty URL
* update more hyperlinks
* updated hyperlinks
* more links
* Update mod.rs

---------

Co-authored-by: Laurent Mazare <laurent.mazare@gmail.com>


@ -3,7 +3,11 @@

|

||||

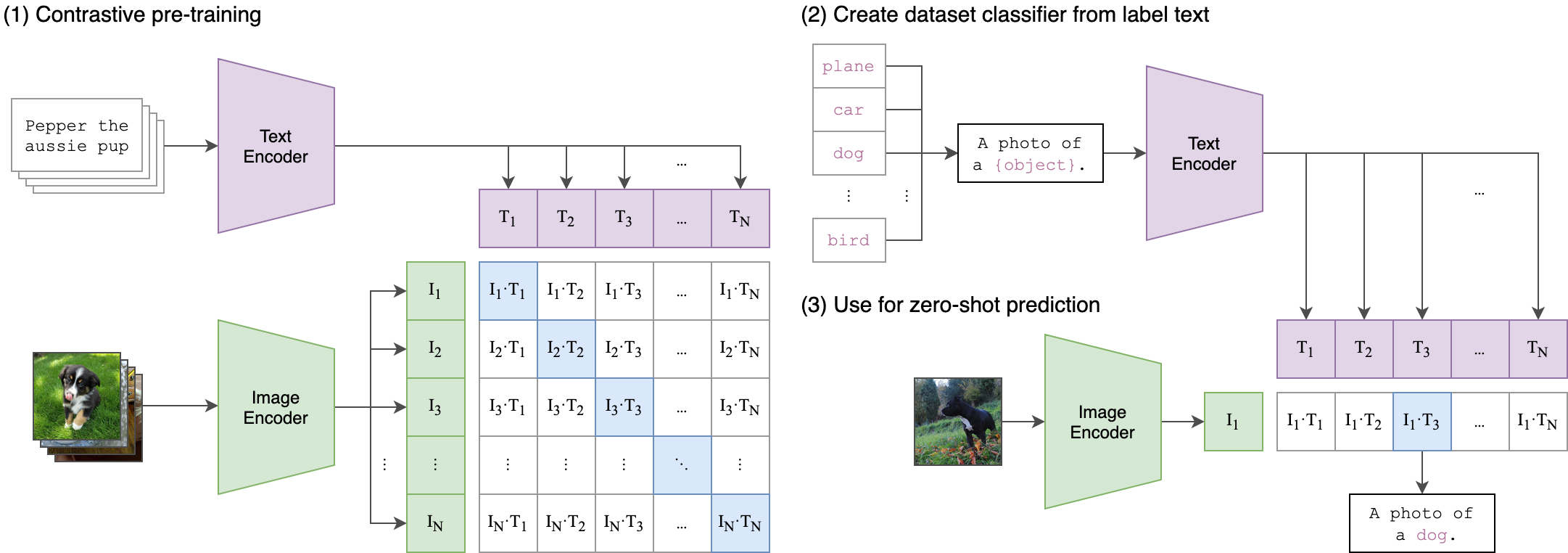

//! Open Contrastive Language-Image Pre-Training (OpenCLIP) is an architecture trained on

|

||||

//! pairs of images with related texts.

|

||||

//!

|

||||

//! - [GH Link](https://github.com/mlfoundations/open_clip)

|

||||

//! - 💻 [GH Link](https://github.com/mlfoundations/open_clip)

|

||||

//! - 📝 [Paper](https://arxiv.org/abs/2212.07143)

|

||||

//!

|

||||

//! ## Overview

|

||||

//!

|

||||

//!

|

||||

|

||||

pub mod text_model;
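The module doc describes OpenCLIP as "trained on pairs of images with related texts". As a rough illustration of what that training objective looks like, here is a minimal sketch, in plain Rust without candle, of the symmetric contrastive loss used by CLIP-style models: each image embedding should score highest against its own caption, and each caption against its own image. This is not code from candle's `openclip` module. The function names (`normalize`, `cross_entropy`, `clip_loss`), the fixed temperature of 0.07 (CLIP itself learns this scale as a parameter), and the toy 2-D embeddings are assumptions chosen for illustration.

```rust
/// L2-normalize a vector so dot products become cosine similarities.
fn normalize(v: &[f32]) -> Vec<f32> {
    let norm = v.iter().map(|x| x * x).sum::<f32>().sqrt().max(1e-12);
    v.iter().map(|x| x / norm).collect()
}

/// Cross-entropy of one row of logits against the correct index,
/// computed with the log-sum-exp trick for numerical stability.
fn cross_entropy(logits: &[f32], target: usize) -> f32 {
    let max = logits.iter().cloned().fold(f32::NEG_INFINITY, f32::max);
    let lse = max + logits.iter().map(|x| (x - max).exp()).sum::<f32>().ln();
    lse - logits[target]
}

/// Symmetric contrastive loss over a batch of N matching (image, text) pairs.
/// Pair i is the positive for row i and column i; every other entry is a negative.
/// `temperature` is a fixed hyperparameter here (an assumption; CLIP learns it).
fn clip_loss(images: &[Vec<f32>], texts: &[Vec<f32>], temperature: f32) -> f32 {
    assert_eq!(images.len(), texts.len(), "batch sizes must match");
    let n = images.len();
    let img: Vec<Vec<f32>> = images.iter().map(|v| normalize(v)).collect();
    let txt: Vec<Vec<f32>> = texts.iter().map(|v| normalize(v)).collect();

    // logits[i][j] = cos(image_i, text_j) / temperature
    let logits: Vec<Vec<f32>> = img
        .iter()
        .map(|a| {
            txt.iter()
                .map(|b| a.iter().zip(b).map(|(x, y)| x * y).sum::<f32>() / temperature)
                .collect()
        })
        .collect();

    // Image-to-text direction: classify the right caption for each image (rows).
    let image_to_text = (0..n).map(|i| cross_entropy(&logits[i], i)).sum::<f32>() / n as f32;

    // Text-to-image direction: classify the right image for each caption (columns).
    let text_to_image = (0..n)
        .map(|j| {
            let column: Vec<f32> = (0..n).map(|i| logits[i][j]).collect();
            cross_entropy(&column, j)
        })
        .sum::<f32>()
        / n as f32;

    (image_to_text + text_to_image) / 2.0
}

fn main() {
    let images = vec![vec![1.0, 0.0], vec![0.0, 1.0]];
    let aligned = vec![vec![0.9, 0.1], vec![0.1, 0.9]]; // captions match their images
    let swapped = vec![vec![0.1, 0.9], vec![0.9, 0.1]]; // captions paired with the wrong images
    let good = clip_loss(&images, &aligned, 0.07);
    let bad = clip_loss(&images, &swapped, 0.07);
    println!("aligned: {good:.4}, swapped: {bad:.4}");
    assert!(good < bad);
}
```

Averaging the row-wise and column-wise cross-entropies makes the objective symmetric, so the image encoder and the text encoder are pushed toward a shared embedding space from both directions.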